The ExaWorks SDK: Technologies for Composable and Scalable HPC Workflows

22 Apr 2021 - Dan Laney (LLNL), Dong Ahn (LLNL), Kyle Chard (ANL), Shantenu Jha (BNL), Tom Uram (ANL), Justin Wozniak (ANL)

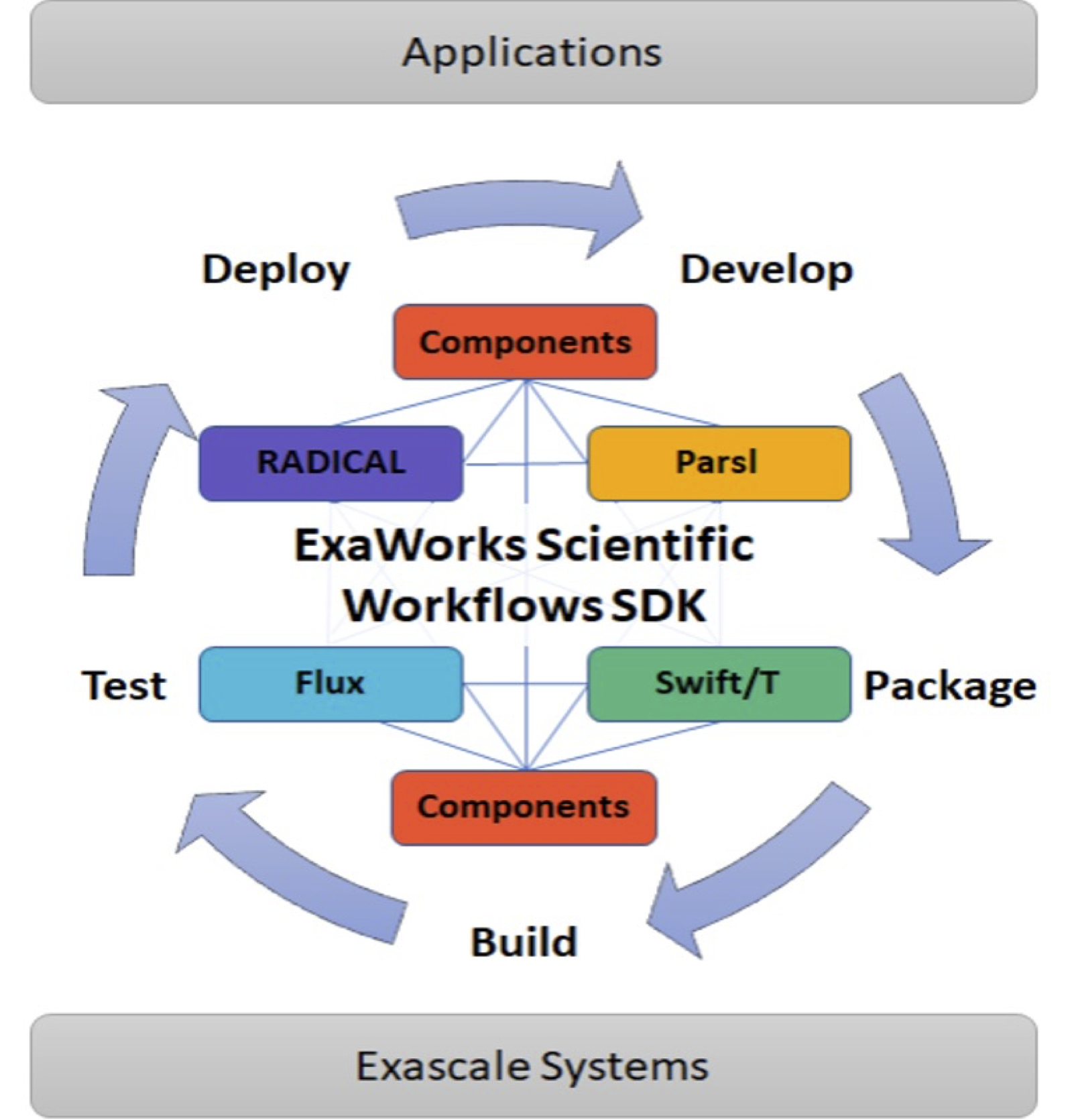

ExaWorks is a new Exascale Computing Project (ECP) effort to curate a community-designed Software Development Kit (SDK) of composable and reusable workflow technologies that can be incorporated into existing workflow systems and bespoke ECP application workflows. We are working with the workflow community to break down barriers between workflow management systems, initiating a move towards increased sharing of low-level functionality, and increasing the robustness and scalability of the crucial workflow components relied upon by ECP applications.

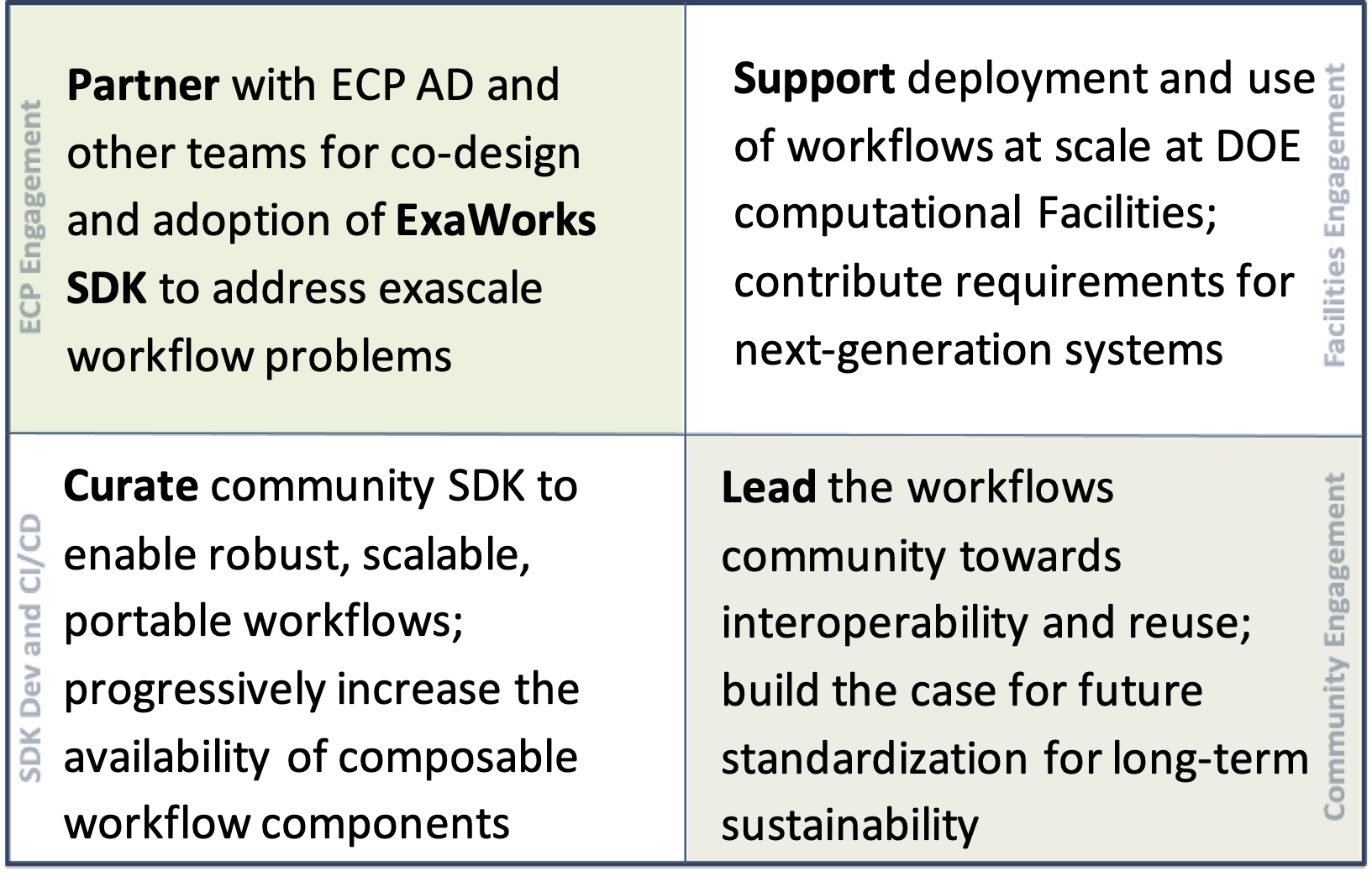

Our approach is organized around four thrust areas: partnering with application teams in ECP, working with the workflows community to create a well-tested and easily deployable workflows toolkit, supporting facilities in ensuring that the SDK is available to users, and providing a nucleation point for the workflows community to increase the interoperability of workflow tools and systems.

An Open Source Software Development Kit for Workflows

ExaWorks is leveraging the E4S framework in ECP as a starting point for creating community standards and SDK policies. Our approach emphasizes progressive levels of interoperability:

Level 0: SDK Technologies can be packaged together

Level 1: Break down vertical silos and leverage capabilities via component interfaces or pairwise integrations

Level 2: Community developed and supported component APIs

Our initial SDK technologies are the Parsl parallel programming and dataflow library, the RADICAL-Cybertools middleware building blocks for workflows, the Swift/T workflow language and runtime, the Balsam workflow manager, and the Flux next-generation workload manager. Each of these technologies has multiple internal abstractions common to many workflow tool sets: job allocation layers, task launch APIs, dependency graph data structures, and orchestration/messaging capabilities. Our goal is to gradually increase the Level 1 interoperability of the SDK via cross-integration of these tools, and to leverage the knowledge gained from those efforts to propose common APIs and libraries for some of these capabilities that can be shared by multiple workflow systems.
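To make one of these shared abstractions concrete, the following is a minimal sketch of a dependency graph data structure, written with only the Python standard library (`graphlib`, Python 3.9+). The task names and the `run_in_waves` helper are hypothetical illustrations and do not come from any of the SDK technologies; each tool has its own, richer representation.

```python
from graphlib import TopologicalSorter

# Hypothetical four-task workflow: each key maps to the set of tasks
# that must finish before it can start.
dependencies = {
    "preprocess": set(),
    "simulate": {"preprocess"},
    "analyze": {"simulate"},
    "visualize": {"simulate"},
}


def run_in_waves(deps):
    """Group tasks into waves; tasks within a wave have no mutual dependencies."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        # A real workflow system would launch the ready tasks concurrently
        # here and mark each one done as it completes.
        sorter.done(*ready)
    return waves


waves = run_in_waves(dependencies)
print(waves)
```

Tasks in the same wave could be launched in parallel, which is the property that a task launch layer would exploit.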

A second feature of our SDK is that most of the technologies can be adopted incrementally by ECP application teams. For example, we have had success integrating the Flux scheduler into an ECP application workflow to increase job throughput with only minimal modifications to the existing workflow scripts.

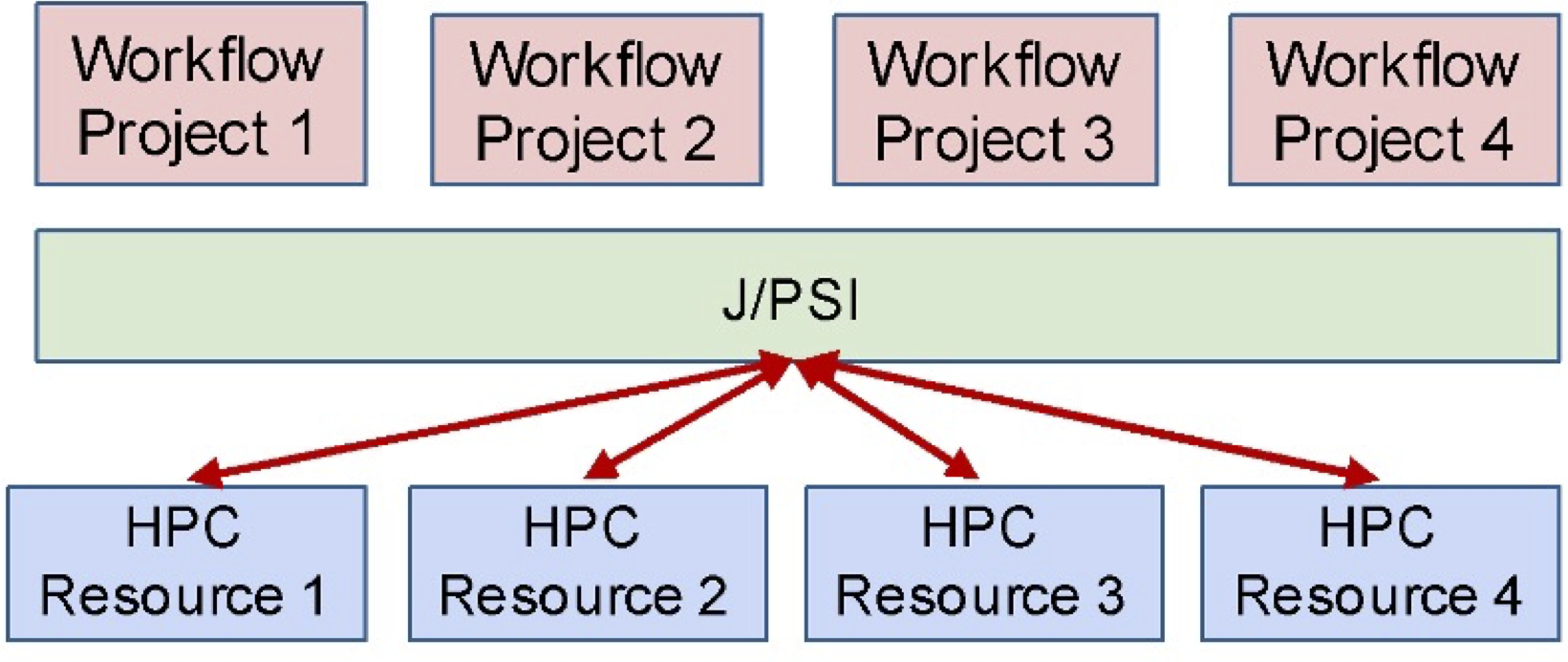

J/PSI: Our First Shared Component

Our first shared API and capability is J/PSI, the Job / Portable Submission Interface. Our initial focus for J/PSI is to provide a common API for obtaining allocations on compute resources and for monitoring and managing the resulting jobs. J/PSI meets a common need across workflows, whether they are built on workflow management systems or on custom scripts. Many workflows acquire a set of nodes as a single allocation and then launch multiple jobs into that allocation. J/PSI represents low-hanging fruit: SDK components and several ECP application teams have each developed their own abstractions, at varying levels, to perform this basic function.
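The idea of a portable submission layer can be illustrated with a small sketch. The code below is not the J/PSI specification or its API; the names `JobSpec`, `JobState`, `JobExecutor`, and `LocalExecutor` are hypothetical and chosen only for this example. It assumes a design in which workflow code is written against an abstract interface and each scheduler supplies a backend; here the only backend runs jobs as local processes.

```python
import subprocess
import sys
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from enum import Enum


class JobState(Enum):
    ACTIVE = "active"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


@dataclass
class JobSpec:
    """Scheduler-independent description of a job (hypothetical fields)."""
    executable: str
    arguments: list = field(default_factory=list)
    nodes: int = 1  # a batch backend would use this; the local one ignores it


class JobExecutor(ABC):
    """Interface that workflow code targets instead of a specific scheduler."""

    @abstractmethod
    def submit(self, spec): ...

    @abstractmethod
    def status(self, job_id): ...

    @abstractmethod
    def wait(self, job_id): ...

    @abstractmethod
    def cancel(self, job_id): ...


class LocalExecutor(JobExecutor):
    """Illustrative backend that runs each job as a local subprocess."""

    def __init__(self):
        self._procs = {}
        self._canceled = set()

    def submit(self, spec):
        proc = subprocess.Popen(
            [spec.executable, *spec.arguments],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
        job_id = len(self._procs)
        self._procs[job_id] = proc
        return job_id

    def status(self, job_id):
        if job_id in self._canceled:
            return JobState.CANCELED
        code = self._procs[job_id].poll()
        if code is None:
            return JobState.ACTIVE
        return JobState.COMPLETED if code == 0 else JobState.FAILED

    def wait(self, job_id):
        self._procs[job_id].wait()
        return self.status(job_id)

    def cancel(self, job_id):
        self._canceled.add(job_id)
        self._procs[job_id].terminate()
        self._procs[job_id].wait()  # reap the terminated process


# Launch several jobs through the same interface and monitor them.
executor = LocalExecutor()
ok = executor.submit(JobSpec(sys.executable, ["-c", "pass"]))
bad = executor.submit(JobSpec(sys.executable, ["-c", "raise SystemExit(3)"]))
final_states = [executor.wait(job) for job in (ok, bad)]
print(final_states)
```

Under this assumed design, a workflow script could move between machines by swapping `LocalExecutor` for a backend that targets a different scheduler, leaving the submission and monitoring logic unchanged.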

J/PSI is fully open source, and its development is open to the community. Anyone who would like to contribute can find our draft specification and prototype implementations at github.com/ExaWorks.

Get Involved!

The ExaWorks team will release early versions of our SDK in the coming months and will work with interested Application Development (AD) teams, providing advice and expertise to help understand and solve workflow problems. We invite all interested developers to join our Slack workspace at exaworks.slack.com and to join our activities on GitHub.

We are interested in hearing about your workflow requirements and in working with you to discover whether ExaWorks technologies, or other open source workflow capabilities, might be useful in achieving your performance, automation, and scalability goals.

This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under contract DE-AC52-07NA27344. Lawrence Livermore National Security, LLC